If you work in compliance-heavy industries like insurance, real estate, or healthcare, you’ve probably felt the tension building over the last few years.

On one hand, sales conversations are becoming more complex. Regulations are tighter. Customers ask smarter questions. Managers need better coaching, better call scoring, and better insight into what sellers actually say on the phone.

On the other hand, every new tool for handling call analysis raises the same question:

“Is this compliant or just another risk waiting to happen?”

In this article, we will analyze where most compliance risks actually come from, why sales teams might unknowingly expose sensitive customer data, and how a real-time, PII-masked approach to call analysis changes the game entirely.

When we think about compliance in sales, we typically focus on what sellers say during calls. Did they disclose the required information? Did they follow the script? Did they avoid prohibited language?

But there's a massive compliance gap that most organizations completely overlook: what happens to the call data after you analyze it through external AI tools?

When you paste a call transcript into the consumer version of ChatGPT, you're not just getting analysis. Unless you've opted out, you may also be contributing to the training of future AI models.

By default, OpenAI's consumer tools may use your inputs to improve their systems. That conversation containing a date of birth, policy number, and health conditions? It can become part of the data pool that trains tomorrow's AI models. Your customer's private information doesn't just disappear after you close the chat window; it can be absorbed into the broader system.

For industries governed by regulations like HIPAA, GLBA, state insurance codes, or real estate privacy laws, this practice is potentially catastrophic. You're taking information that your customers trusted you to protect and feeding it into a system designed to learn from it and reuse it.

But here's what makes this particularly insidious: it doesn't feel dangerous. There's no warning label. No pop-up asking "Are you sure you want to upload protected health information?" The interface is friendly and helpful, which makes it easy to forget that you're transmitting sensitive data across the internet to a third party with its own business interests.

There’s another risk that often flies under the radar: most generic transcription tools were never built for compliance-heavy industries in the first place. They’re designed for speed and convenience, not regulatory realities. As a result, they don’t reliably detect or mask personally identifiable information. Policy numbers, dates of birth, addresses, medical details—all of it ends up fully exposed in plain text transcripts that get stored, shared, and analyzed as-is. Once that data exists in an unredacted form, it becomes another liability surface you no longer control. This is why “just transcribing the call” is rarely just that in regulated environments.

For teams operating in regulated environments, this creates a simple but uncomfortable reality: you can’t bolt compliance on after the fact. It has to be handled automatically, in real time, by a system designed from the ground up to prevent sensitive data from ever being exposed in the first place.

SellMeThisPen.ai is designed with privacy at its core. We built our approach around a simple principle: sensitive data should never exist in a form where it can be exposed, stolen, or misused. Our platform automatically detects and masks sensitive personal information in call transcripts, ensuring your customer data stays protected.

Let us walk you through exactly how we handle your customer data, and more importantly, how we don't.

We don't store PII (personally identifiable information). Not in encrypted form. Not in a secure database. Not anywhere.

When your team uses our platform, any PII that appears in a conversation is identified and masked. The original, unredacted version never touches our storage systems because that sensitive data never exists in a storable form in the first place.

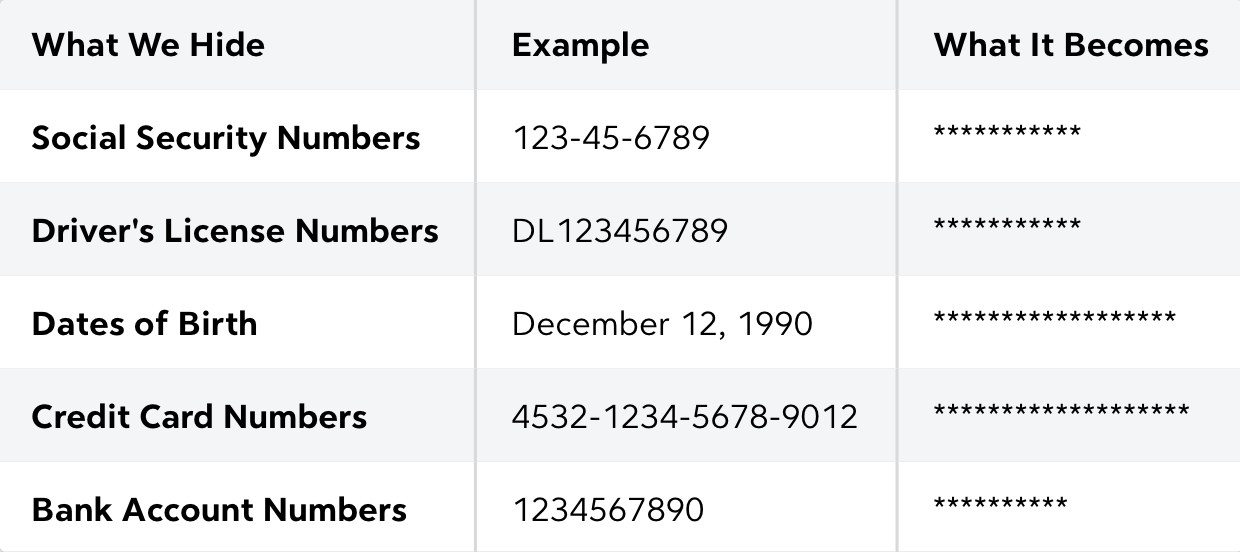

Think of it like this: if you or anyone else inspected the data we store, you wouldn't find Social Security numbers, credit card information, or medical details. You'd find masked placeholders where that information used to be. The original data was discarded the moment it was processed.
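To make the idea of masked placeholders concrete, here is a minimal Python sketch. It is an illustration under simplifying assumptions, not a description of the platform's actual detection logic: the `PII_PATTERNS` table, the placeholder labels, and the `mask_pii` function are all hypothetical, and real-world detection generally needs far more than a handful of regular expressions.

```python
import re

# Hypothetical patterns for illustration only; production PII detection
# typically combines rules like these with trained entity recognizers.
PII_PATTERNS = {
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "CARD": re.compile(r"\b(?:\d{4}[ -]?){3}\d{4}\b"),
    "DOB": re.compile(r"\b\d{1,2}/\d{1,2}/\d{4}\b"),
}


def mask_pii(text: str) -> str:
    """Replace every match of each pattern with a labeled placeholder."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


line = "My SSN is 123-45-6789 and I was born on 04/12/1985."
print(mask_pii(line))  # prints: My SSN is [SSN] and I was born on [DOB].
```

The labeled placeholders keep the sentence readable for coaching purposes, while the values themselves are gone from the text that gets stored.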

When your sellers use our real-time assistance features through supported dialers, we need to process audio to generate live transcriptions. But here's the crucial difference: we never record or store that audio.

The audio stream is held temporarily in memory—just long enough to create a transcript. The moment transcription is complete, the audio is discarded. It's not saved to a file. It's not backed up. It doesn't exist anymore.
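The pattern described above, where audio is buffered in memory and dropped once a transcript exists, could look roughly like the following Python sketch. The function names are hypothetical, and `fake_transcribe` is only a stand-in so the example runs without a real speech-to-text engine; it makes no claim about how any particular transcription service is called.

```python
import io
from typing import Callable, Iterable


def transcribe_in_memory(
    chunks: Iterable[bytes],
    transcribe: Callable[[bytes], str],
) -> str:
    """Buffer audio in RAM, transcribe it, and drop the buffer.

    `transcribe` is a hypothetical stand-in for a speech-to-text call.
    """
    buffer = io.BytesIO()  # lives in process memory only, never written to disk
    try:
        for chunk in chunks:
            buffer.write(chunk)
        return transcribe(buffer.getvalue())
    finally:
        buffer.close()  # release the buffered audio once transcription ends


def fake_transcribe(audio: bytes) -> str:
    # Placeholder so the sketch runs without a real speech-to-text engine.
    return f"<transcript of {len(audio)} audio bytes>"


print(transcribe_in_memory([b"\x00\x01", b"\x02\x03"], fake_transcribe))
# prints: <transcript of 4 audio bytes>
```

Note that the function has no code path that writes audio to a file or database. Only the transcript string leaves it, which is the property that matters here.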

If we don't have the recording, we can't accidentally expose it. We can't have it stolen in a breach. We can't be compelled to turn it over in a legal dispute.

All our processing happens inside a private Microsoft Azure OpenAI instance—not OpenAI's public environment. This distinction is crucial. Your data never touches the public OpenAI API. Microsoft operates Azure OpenAI as a completely isolated service within their cloud, and the data path never leaves Azure's controlled environment.

Why does this matter? Because Azure OpenAI runs within Microsoft Azure's PCI DSS Level 1-compliant cloud infrastructure. Level 1 is the most rigorous validation tier in the payment card industry standard, the one that applies to organizations handling the largest volumes of card transactions. Our platform builds on Microsoft's compliance posture, which means we benefit from enterprise-grade security and privacy controls.

*You can also find Microsoft’s official statement on Azure OpenAI data handling here: https://learn.microsoft.com/en-us/azure/ai-foundry/responsible-ai/openai/data-privacy*

After the real-time masking process completes, we save exactly one version of your transcript: the fully redacted one.

That means dates of birth, account numbers, and addresses are all replaced with clear placeholders that preserve the flow and context of the conversation without exposing the actual sensitive information.

This approach gives you the best of both worlds: you can review, analyze, and learn from your calls without putting customer data at risk. Your managers can coach on technique, compliance adherence, and sales effectiveness—all without ever seeing someone’s actual information.

Remember that concern about ChatGPT using your inputs for training? That doesn't happen here.

We do not use your data—transcripts, scripts, roleplays, custom content, or anything else you create or upload—to train or improve AI models. Not our models. Not anyone else's models.

The AI services we use through Microsoft Azure come with contractual guarantees about data handling. Your information isn't fed back into model training. It's not used to improve future versions of the AI. It's not analyzed for business intelligence purposes. It's used for exactly one thing: providing you with the analysis and coaching tools you requested.

Your scripts, roleplays, custom training materials, and workspace content belong to you. Not to us. Not shared ownership. Not licensed to us.

You maintain full ownership and control. You can export it. You can delete it. You can take it with you if you ever leave our platform.

We're a tool you use to improve your team's performance. We're not claiming ownership over your intellectual property or your coaching methodologies.

Here's our final safety mechanism, and it's possibly the most important one: when our system cannot confirm complete masking, the transcript is removed entirely.

Most platforms would give you the partially masked transcript with a warning. We don't. If we can't guarantee that all sensitive information has been properly protected, we delete the entire transcript rather than risk exposure.

We prioritize data protection over data availability. Always.

You might lose the coaching opportunity from that one call, but you'll never face a compliance violation because our system gave you a false sense of security.
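A fail-closed rule of this kind can be sketched in a few lines of Python. Everything here is an assumption made for illustration: the `RESIDUAL_PII` check (any run of seven or more digits) and the `release_or_discard` function are hypothetical, and a real verifier would need a much broader notion of what counts as unconfirmed masking.

```python
import re
from typing import Optional

# Hypothetical verifier: any run of 7+ digits (allowing spaces or dashes
# between them) is treated as possibly unmasked PII.
RESIDUAL_PII = re.compile(r"\d(?:[ -]?\d){6,}")


def release_or_discard(masked_transcript: str) -> Optional[str]:
    """Return the transcript only if no residual pattern is found; else None."""
    if RESIDUAL_PII.search(masked_transcript):
        return None  # fail closed: drop the whole transcript, not just the match
    return masked_transcript


print(release_or_discard("Policy [POLICY_NUMBER] renews in 30 days."))
# prints: Policy [POLICY_NUMBER] renews in 30 days.
print(release_or_discard("Sure, my number is 555 010 4477."))
# prints: None
```

The design choice is that the failure path returns nothing at all. There is no branch that hands back a partially masked transcript with a warning attached.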

If you work in insurance, real estate, or healthcare sales, you already know that compliance isn't optional. A single data breach or privacy violation can result in:

But beyond these obvious concerns, there's a competitive reality: your customers are getting smarter about data privacy. They ask questions about how their information is handled. They read privacy policies. They choose providers who take their data seriously.

When you can honestly tell a prospect, "We never store your personal information, not even in encrypted form. It's masked in real time and the original data is immediately discarded," you're not just checking a compliance box. You're demonstrating a level of respect for their privacy that builds trust.

If you're currently using another call coaching or analysis solution, we recommend asking these questions:

The answers might surprise you. Many providers can't give you clear, confident responses to these questions because their systems weren't designed with compliance as the foundation; it was added as an afterthought.

Improving your team's sales performance shouldn't require gambling with customer data. Coaching your sellers shouldn't mean choosing between effectiveness and compliance.

The technology exists to do both: to provide powerful AI-driven insights while ensuring that sensitive information never exists in a vulnerable form. It requires careful architectural choices, the right partners, and an unwavering commitment to putting data protection first.

Your customers trusted you with their information when they agreed to that sales call. They trusted that you'd handle it responsibly, protect it appropriately, and use it only for the purposes they authorized.

That trust shouldn't evaporate the moment the call ends and the coaching process begins.

In this article, we've analyzed the hidden compliance risks in common call coaching practices and shown you what real data protection looks like in action. The choice is yours: continue with approaches that create unnecessary vulnerabilities, or demand better from your tools and partners.

If trust matters to your business, the architecture behind your tools matters even more.

For any questions regarding our security practices and compliance frameworks, please reach out to help@sellmethispen.ai.